AI

Worries about an AI catastrophe

Prominent figures in the field of artificial intelligence are concerned about the potential dangers posed by superintelligent AI, and some even predict an apocalypse.

“There is both excitement and fear. The doomsday scenario of AI is that it may resemble the storyline of the Terminator movies,” said Ivana Bartoletti, a data protection and privacy expert and founder of the Women Leading in AI Network, in an opinion piece for The Guardian.

Since last year, generative AI tools such as OpenAI’s ChatGPT and DALL-E, Google’s Bard, and Midjourney have become widespread. However, these systems can produce biased outcomes, such as favoring men over women, misidentifying the faces of people with darker skin, or assigning higher fraud risk to immigrant families. Such failures can push the people affected into dire situations.

“These are societal issues that we have recognized, demonstrating the need to reach consensus on the right direction for AI, to use this technology responsibly, and to impose constraints on it,” wrote Ms. Bartoletti.

AI pioneer Geoffrey Hinton made headlines in early May when he announced his departure from Google. Hinton, a recipient of the 2018 Turing Award, often called the “Nobel Prize of Computing,” took to Twitter on May 1st to clarify his reason for leaving: he had not left in order to criticize Google, as a New York Times report implied, but “so that I could talk about the dangers of AI without considering how this impacts Google.” Free of corporate ties, Hinton can now speak openly about the risks of artificial intelligence, and his move drew wide attention within the tech community and beyond.

According to Hinton, the immediate threats posed by AI are the proliferation of fake images, videos, and text on the Internet, and the profound changes it will bring to the job market in the near future. In the longer term, he worries that AI could become a threat to human civilization once it gains the ability to process massive amounts of data.

“When they start writing and running their own code, killer robots will become a reality. AI could become smarter than humans, and many people are starting to believe this. I was wrong to think it would take another 30 to 50 years for AI to make this kind of progress. Now everything is changing too fast,” Hinton told The New York Times.

In mid-April, Google CEO Sundar Pichai also said that AI keeps him up at night: it has the potential to be more dangerous than anything humanity has ever seen, and society is not ready for its rapid development. “It could be a catastrophe if deployed wrongly. One day, AI will have capabilities that surpass human imagination, and we cannot fully fathom the worst things that could happen,” he said in an interview with CBS.

Sam Altman, co-founder and CEO of OpenAI, shares similar concerns. While acknowledging the vast benefits AI can bring, he speaks openly about the risks: the spread of misinformation, economic disruption, and the possibility that AI will outpace society’s readiness in certain domains. Altman has raised these concerns repeatedly, has said he was surprised by how popular ChatGPT became, and stresses the need for vigilance as the technology advances.

At a World Economic Forum event in Geneva on May 3rd, Microsoft chief economist Michael Schwarz echoed these concerns. He warned that AI can cause real harm if misused, citing spam, fraud, and even election interference, and said he was certain that bad actors would exploit the technology. That, he said, raises a fundamental question: how can AI be regulated so that society gains its benefits while limiting the risks?

Experts are also looking ahead to a more advanced form of AI called Artificial General Intelligence (AGI). According to a Stanford University survey published last month, 56% of computer scientists and AI researchers believe that generative AI will move toward AGI in the near future.

According to Fortune, AGI is considered far more complex than current AI models because it would be aware of what it says and does. In theory, such technology could give humans serious cause for concern in the future.

According to the survey results, approximately 58% of AI experts view AGI as a “major concern,” and 36% believe that it could lead to a “nuclear-level catastrophe.” Some experts suggest that AGI may represent a “technological singularity” – a hypothetical point in the future when machines surpass human capabilities in a way that cannot be reversed and could pose a threat to civilization.

Demis Hassabis, the CEO of DeepMind, Google’s Artificial Intelligence company, expressed his astonishment at the progress of AI and AGI models in recent years. In an interview with Fortune, he stated, “I see no reason why the progress should slow down. We only have a few years, or at most a decade, to prepare.”

At the end of March, more than 1,000 prominent figures in technology, including billionaire Elon Musk, Apple co-founder Steve Wozniak, and AI researcher Yoshua Bengio, signed an open letter calling on AI labs to pause the training of systems more powerful than GPT-4 for at least six months so that a shared set of safety rules for AI could be established.

According to Bartoletti, amid the excitement and fear, the focus should be on finding ways to control AI rather than halting its development. AI holds tremendous potential in many fields, such as analyzing cancerous tumors in CT scans. Last year, DeepMind’s AI predicted the structures of nearly all known proteins, cracking a problem that had challenged biologists for about 50 years.

Bartoletti emphasizes the need to approach AI with a balanced perspective and not to be blindly optimistic or excessively fearful. She believes that it is important to recognize the positive aspects of AI and to establish global rules if we want to harness its transformative power in a beneficial way.

Governments around the world, including the United States, the European Union, India, and China, have started to pay attention to AI regulation. At a meeting with technology companies on May 2nd, President Joe Biden stressed that these businesses must ensure their AI products are safe. On April 30th, the United Nations also announced the Global Digital Compact, aimed at safeguarding human rights in the digital age.

These initiatives reflect a growing recognition that AI must be developed responsibly, with frameworks and regulations that mitigate its risks and ensure the ethical and safe deployment of AI technologies.

Elon Musk’s Grok AI was built in just two months.

The development team of xAI stated that Grok was trained for two months using data from the X platform.

“Grok is still in the early testing phase, and it is the best product we could produce after two months of training,” xAI wrote in the Grok launch announcement on November 5th.

This is one of the AI systems that has been trained in the shortest amount of time. Previously, OpenAI took several years to build large language models (LLMs) before unveiling ChatGPT in November 2022.

xAI also mentioned that Grok utilizes a large language model called Grok-1, which was developed based on the Grok-0 prototype with 33 billion parameters. Grok-0 was built shortly after the company was founded by Elon Musk in July of this year.

Although the whole effort took only about four months, the company asserts that Grok-1 outperforms popular models such as GPT-3.5, which powers ChatGPT. On math, reasoning, and coding benchmarks such as GSM8k, MMLU, and HumanEval, xAI’s model scores higher than Llama 2 70B, Inflection-1, and GPT-3.5.

For example, in a math problem-solving test based on this year’s Hungarian National High School Math Competition, Grok-1 achieved a score of 59%, higher than GPT-3.5’s score of 41% and only slightly below GPT-4 (68%).

According to xAI, the distinguishing feature of Grok is its “real-time world knowledge” through the X platform. It also claims to answer challenging questions that most other AI systems would reject.

On launch day, Musk demonstrated this by asking Grok for “the steps to make cocaine.” The chatbot promptly listed a process, then added that it was only joking.

Grok is the first product of Musk’s startup xAI, whose team includes former employees of DeepMind, OpenAI, Google Research, Microsoft Research, and Tesla, as well as researchers from the University of Toronto. Musk also co-founded OpenAI, the organization behind ChatGPT, in 2015, but later left after disagreements over control of the company. When he departed, he said he would compete with OpenAI for talent and withdrew the $1 billion in funding he had previously promised.

Generative AI – a new battleground in phone chip design.

Smartphone makers and mobile chip manufacturers are joining the generative AI wave, aiming to bring the technology to phones in the near future.

Generative AI has exploded over the past year, with a range of applications released to generate text, images, and music, along with general-purpose assistants. Smartphone and semiconductor companies are building new hardware so as not to miss the wave. Google’s Pixel 8 is leading the way, while Qualcomm’s Snapdragon 8 Gen 3 processor is set to launch in the coming days.

The latest signs indicate that phone makers are embracing generative AI, starting with Google. The Pixel 8 series is the first set of smartphones able to run Google’s foundation models for generative AI directly on the device, without an internet connection. According to the company, running these models on the Pixel 8 reduces its dependence on cloud services, improving privacy and reliability because the data does not have to leave the phone.

This is made possible by the Tensor G3 chip, whose TPU (Tensor Processing Unit) has been significantly upgraded over last year’s. Google usually keeps the workings of its AI chips under wraps but has shared some details: the Pixel 8 runs twice as many on-device machine learning models as the Pixel 6, and its on-device generative AI involves about 150 times more computation than the largest model on the Pixel 7.

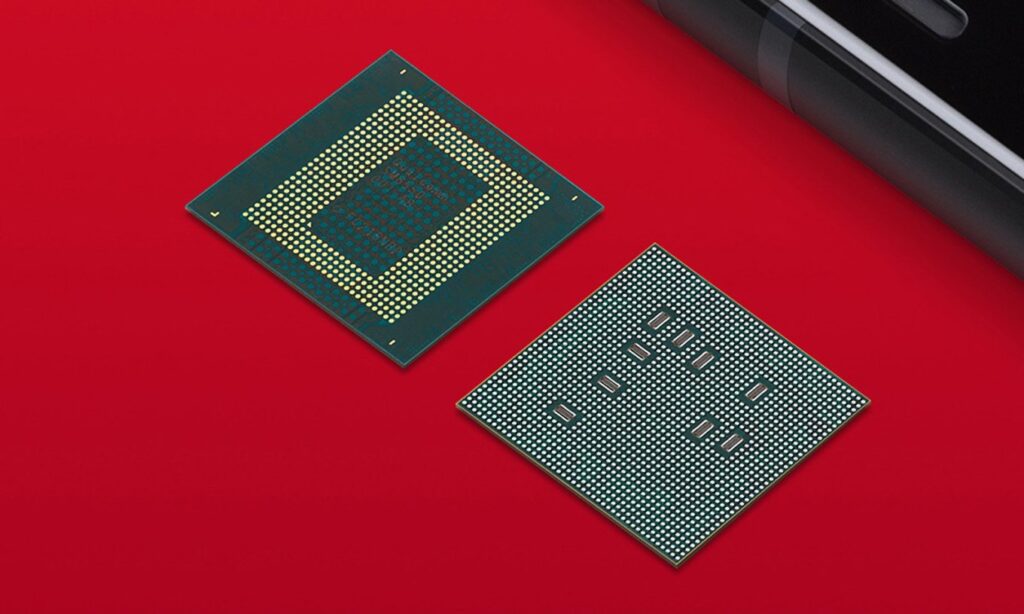

Google is not the only phone maker building generative AI into its hardware. Earlier this month, Samsung announced the Exynos 2400 chipset, with AI computing performance 14.7 times that of the Exynos 2200. Samsung is also developing AI tools for upcoming phones that use the 2400, allowing users to run text-to-image applications directly on the device without an internet connection.

Qualcomm’s Snapdragon chips power many leading Android smartphones worldwide, which raises expectations for the generative AI capabilities of the Snapdragon 8 Gen 3.

Earlier this year, Qualcomm demonstrated the Stable Diffusion text-to-image model running on a device powered by the Snapdragon 8 Gen 2. This suggests that on-device image generation could be a new feature of the Gen 3 chipset, especially since Samsung’s Exynos 2400 offers a similar capability.

Qualcomm Senior Director Karl Whealton said upcoming devices can “do almost anything you want” if their hardware is powerful, efficient, and flexible enough. He noted that people often think of specific generative AI features and ask whether existing hardware can handle them, and stressed that Qualcomm’s current chipsets are powerful and flexible enough to meet users’ needs.

Several smartphones with 24 GB of RAM have also launched this year, which could make them well suited to running generative AI models. “I won’t name device manufacturers, but large RAM capacity brings many benefits, including better performance. The understanding capability of AI models is often related to the size of the model,” Whealton said.

AI models are typically loaded into RAM and kept there, because running them from regular flash storage would significantly increase application loading times.

“People want to achieve a rate of 10-40 tokens per second. That ensures good results, providing almost human-like conversations. This speed can only be achieved when the model is in RAM, which is why RAM capacity is crucial,” he added.
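Why RAM capacity matters can be made concrete with back-of-the-envelope arithmetic: the memory needed just to hold a model’s weights is roughly its parameter count multiplied by the bytes stored per weight. The Python sketch below is an illustration under stated assumptions, not a figure from Qualcomm: it ignores activations, caches, and operating-system overhead (so real usage is higher), and the model sizes are hypothetical examples.

```python
# Rough estimate of the RAM needed just to hold a model's weights.
# Assumption: memory = parameters x bits per weight / 8. Activations, caches
# and OS overhead are ignored, so real-world usage will be higher.


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Return the approximate weight footprint in gigabytes (10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, chosen for illustration only.
    for params in (1e9, 7e9, 13e9):
        gb = weight_memory_gb(params, 16)
        print(f"{params / 1e9:.0f}B parameters at 16-bit: ~{gb:.1f} GB")
```

By this estimate, a 7-billion-parameter model stored at 16 bits per weight needs about 14 GB for its weights alone, before anything else on the phone is loaded.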

However, this does not mean that smartphones with low RAM will be left behind.

“On-device generative AI will not set a minimum RAM requirement, but the functionality it enables will scale with RAM capacity. Phones with less RAM will not be left out of the game, but generative AI results will be significantly better on devices with more RAM,” Whealton commented.

Qualcomm’s Communications Director, Sascha Segan, proposed a hybrid approach for smartphones that cannot hold large AI models: they can host smaller models and process requests on the device, then check the results against a larger cloud-based model. Many AI models are also being shrunk or quantized to run on mid-range and older phones.
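Quantization shrinks a model by storing each weight with fewer bits. As an illustration only, the Python sketch below shows symmetric per-tensor int8 quantization, a common textbook scheme; it is not a description of Qualcomm’s or any other vendor’s toolchain, and the weight values are made up. Each int8 code takes one byte instead of the two needed for a 16-bit weight, halving the weight footprint at the cost of a small rounding error.

```python
# Minimal sketch of symmetric per-tensor int8 quantization (illustrative only;
# production toolchains typically use finer-grained, per-channel schemes).
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise divide by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.01, -0.27]  # hypothetical weights
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, approx))
    print("int8 codes:", q)
    print(f"scale={scale:.5f}, max reconstruction error={max_err:.5f}")
```

Because every value is rounded to the nearest multiple of the scale, the reconstruction error per weight is at most half the scale; smarter schemes reduce this error further, which is part of the “AI compression” work the experts describe.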

According to experts, generative AI models will play an increasingly important role in upcoming mobile devices. Most phones currently rely on the cloud, but on-device processing will be key to improving security and expanding features. That requires more powerful chips, more memory, and smarter AI compression techniques.

AI can diagnose type 2 diabetes from a person’s voice in 10 seconds.

Medical researchers in Canada have trained artificial intelligence (AI) to accurately diagnose type 2 diabetes in just 6 to 10 seconds, using the patient’s voice.

According to the Daily Mail, a research team at Toronto-based Klick Labs achieved this breakthrough after their machine learning model identified 14 acoustic features that differ between people without diabetes and those with type 2 diabetes.

The AI focused on a set of voice features, including subtle changes in pitch and intensity that are imperceptible to the human ear. This data was then combined with basic health information, including age, gender, height, and weight of the study participants.

The researchers found that sex made a difference: the AI diagnosed the disease with 89% accuracy for women and a slightly lower 86% for men.

This AI model holds the promise of significantly reducing the cost of medical check-ups. The research team stated that the Klick Labs model would be more accurate when additional data such as age and body mass index (BMI) of the patients are incorporated.

Yan Fossat, Vice President of Klick Labs and lead researcher on this model, is confident that their voice technology has significant potential for identifying type 2 diabetes and other health conditions.

Fossat also teaches at Ontario Tech University, specializing in mathematical modeling and computational science for digital health.

He hopes that Klick’s non-invasive and accessible AI diagnostic method can create opportunities for disease diagnosis through a simple mobile application. This would help identify and support millions of individuals with undiagnosed type 2 diabetes who may not have access to screening clinics.

He also expressed his hope to expand this new research to other healthcare areas such as prediabetes, women’s health, and hypertension.